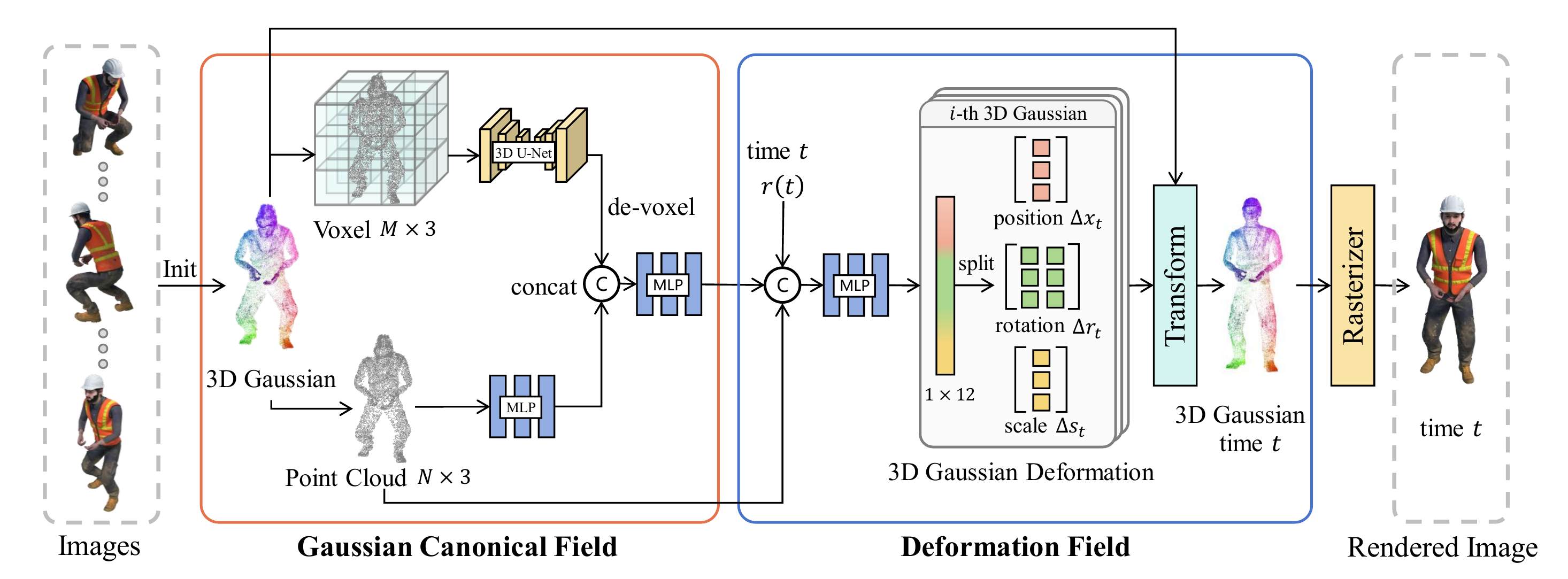

Our Architecture

The pipeline of our proposed 3D geometry-aware deformable Gaussian splatting. In the Gaussian canonical field, we reconstruct a static scene in canonical space using 3D Gaussian distributions. We extract positional features with an MLP and local geometric features with a 3D U-Net, then fuse them with another MLP to form the geometry-aware features. In the deformation field, an MLP takes the geometry-aware features and the timestamp $t$ and estimates the 3D Gaussian deformation, which transforms the canonical 3D Gaussian distributions to timestamp $t$. Finally, a rasterizer renders the transformed 3D Gaussians into images.
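
The data flow in this caption can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the actual implementation: the layer sizes, the random weights, and the names `init_mlp`, `mlp`, `local_geometry_features`, and `deform` are all hypothetical. The 3D U-Net is replaced by a simple nearest-neighbor descriptor as a stand-in, the deformation is assumed to consist of offsets to position, rotation (quaternion), and scale, and the rasterizer is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Random weights for a small fully connected network (hypothetical sizes)."""
    return [(rng.normal(0.0, 0.1, (i, o)), np.zeros(o))
            for i, o in zip(sizes[:-1], sizes[1:])]

def mlp(params, x):
    """ReLU MLP; the last layer is linear."""
    for k, (w, b) in enumerate(params):
        x = x @ w + b
        if k < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x

def local_geometry_features(xyz, k=4):
    """Stand-in for the 3D U-Net (NOT a U-Net): describes each Gaussian's
    neighborhood by the mean offset and mean distance to its k nearest neighbors."""
    dist = np.linalg.norm(xyz[:, None, :] - xyz[None, :, :], axis=-1)  # (N, N)
    idx = np.argsort(dist, axis=1)[:, 1:k + 1]                         # skip self
    offset = xyz[idx].mean(axis=1) - xyz                               # (N, 3)
    mean_dist = np.take_along_axis(dist, idx, axis=1).mean(axis=1, keepdims=True)
    return np.concatenate([offset, mean_dist], axis=1)                 # (N, 4)

def deform(xyz, rot, scale, t, nets):
    """Map canonical Gaussians to timestamp t."""
    pos_feat = mlp(nets["pos"], xyz)                     # positional features
    geo_feat = local_geometry_features(xyz)              # local geometric features
    fused = mlp(nets["fuse"], np.concatenate([pos_feat, geo_feat], axis=1))
    t_col = np.full((xyz.shape[0], 1), t)                # same timestamp for all Gaussians
    delta = mlp(nets["deform"], np.concatenate([fused, t_col], axis=1))
    # Assumed output layout: 3 position, 4 rotation, 3 scale offsets.
    d_xyz, d_rot, d_scale = delta[:, :3], delta[:, 3:7], delta[:, 7:10]
    new_rot = rot + d_rot
    new_rot /= np.linalg.norm(new_rot, axis=1, keepdims=True)  # keep unit quaternions
    return xyz + d_xyz, new_rot, scale + d_scale

N, F = 32, 16  # number of Gaussians and feature width (arbitrary)
nets = {
    "pos": init_mlp([3, 32, F]),
    "fuse": init_mlp([F + 4, 32, F]),
    "deform": init_mlp([F + 1, 32, 10]),
}
xyz = rng.uniform(-1.0, 1.0, (N, 3))        # canonical centers
rot = np.tile([1.0, 0.0, 0.0, 0.0], (N, 1)) # identity quaternions
scale = np.full((N, 3), 0.05)

xyz_t, rot_t, scale_t = deform(xyz, rot, scale, t=0.3, nets=nets)
```

The transformed parameters `xyz_t`, `rot_t`, and `scale_t` are what would be handed to the rasterizer for timestamp $t$. In a trained system the weights would be learned from rendering losses rather than drawn at random.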