Abstract

Semi-supervised video object segmentation (VOS) has advanced considerably, with dense matching-based methods achieving state-of-the-art performance.

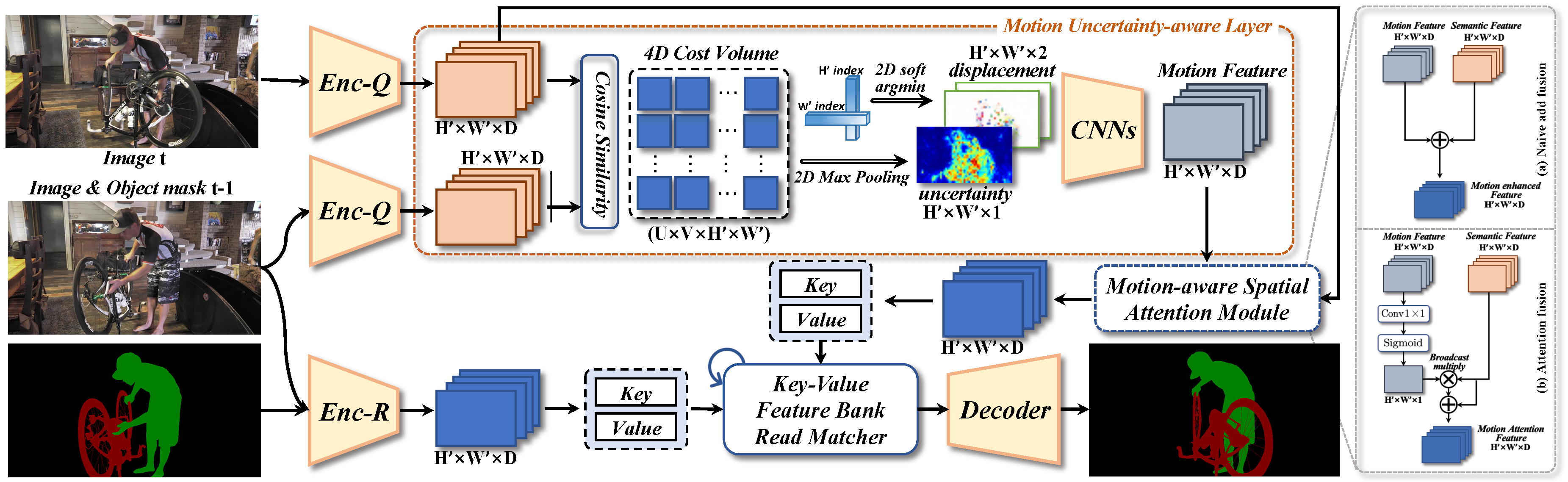

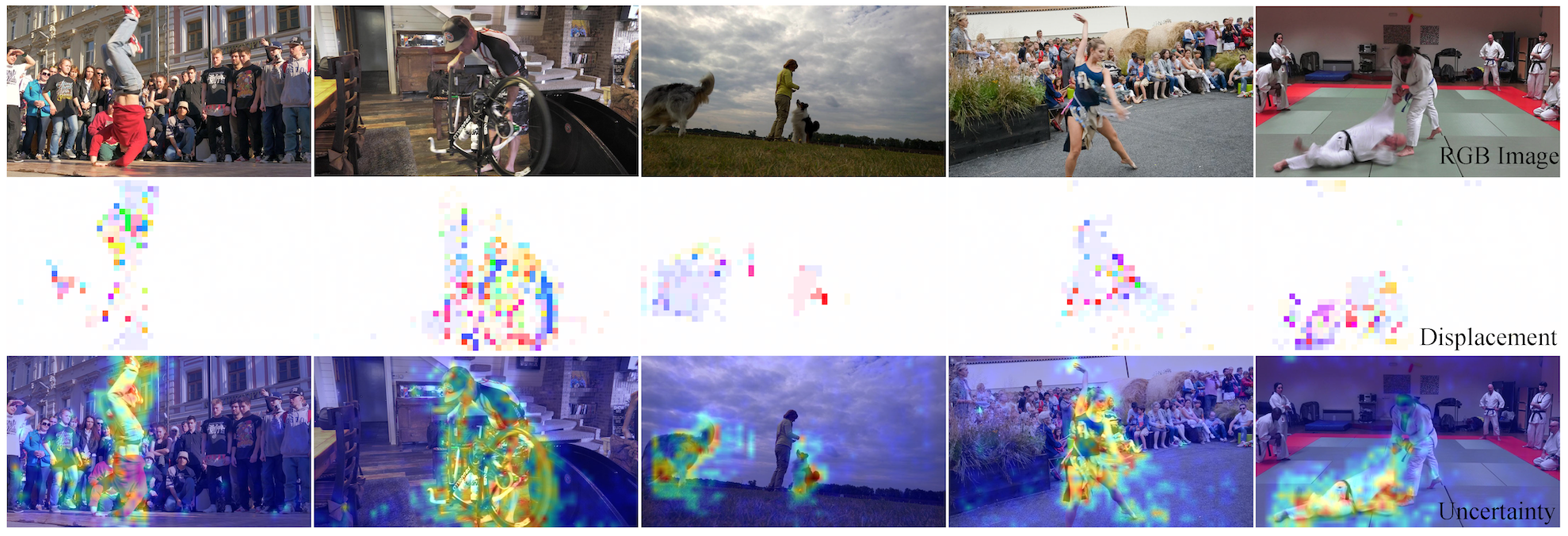

Recent methods leverage space-time memory (STM) networks and learn to retrieve relevant information from all available sources: past frames with object masks form an external memory, and the current frame, acting as the query, is segmented using the mask information in the memory. However, when forming the memory and performing matching, these methods exploit only appearance information and ignore motion information. In this paper, we advocate the return of motion information and propose a motion uncertainty-aware framework (MUNet) for semi-supervised VOS. First, we propose an implicit method to learn the spatial correspondences between neighboring frames, building upon a correlation cost volume.
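To make the notion of a correlation cost volume concrete, the following is a minimal NumPy sketch, not the MUNet implementation. It assumes per-frame feature maps of shape `(C, H, W)` and a small square search window; the function name `local_cost_volume` and the `radius` parameter are hypothetical and chosen only for illustration.

```python
import numpy as np

def local_cost_volume(feat_prev, feat_cur, radius=1):
    """Correlate each current-frame pixel with a window of previous-frame pixels.

    feat_prev, feat_cur: arrays of shape (C, H, W) (assumed layout).
    Returns an array of shape (D, H, W) with D = (2 * radius + 1) ** 2, where
    plane index dy * (2 * radius + 1) + dx holds the correlation with the
    previous-frame pixel displaced by (dy - radius, dx - radius).
    """
    c, h, w = feat_cur.shape
    # Zero-pad the spatial dimensions so every displacement stays in bounds.
    padded = np.pad(feat_prev, ((0, 0), (radius, radius), (radius, radius)))
    planes = []
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            shifted = padded[:, dy:dy + h, dx:dx + w]
            # Channel-wise dot product, scaled by the channel count.
            planes.append((feat_cur * shifted).sum(axis=0) / c)
    return np.stack(planes)
```

With L2-normalized features, the plane with the highest correlation at a pixel indicates the most likely displacement of that pixel between the two frames. A learned model would typically consume the whole volume rather than only its argmax.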

To handle the challenging cases of occlusion and textureless regions when constructing dense correspondences, we incorporate uncertainty into dense matching and obtain a motion uncertainty-aware feature representation. Second, we introduce a motion-aware spatial attention module to effectively fuse the motion feature with the semantic feature.
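The abstract does not specify how the uncertainty is computed or how the attention module is built, so the sketch below is only one plausible reading under stated assumptions. It takes a matching cost array of shape `(D, H, W)` (one score per candidate displacement), uses the normalized entropy of its softmax as the uncertainty, and applies a residual spatial gate to the semantic feature. The functions `matching_uncertainty` and `motion_aware_fusion` are hypothetical names, and both the entropy measure and the gating formula are assumptions rather than the authors' design.

```python
import numpy as np

def matching_uncertainty(cost):
    """Normalized softmax entropy per pixel, in [0, 1].

    cost: array of shape (D, H, W) with D candidate matches per pixel.
    Values near 1 mean the match is ambiguous (e.g. textureless regions).
    """
    z = cost - cost.max(axis=0, keepdims=True)  # stabilize the softmax
    p = np.exp(z)
    p /= p.sum(axis=0, keepdims=True)
    entropy = -(p * np.log(p + 1e-12)).sum(axis=0)
    return np.clip(entropy / np.log(cost.shape[0]), 0.0, 1.0)

def motion_aware_fusion(semantic, motion, uncertainty):
    """Gate semantic features with a motion-derived spatial attention map.

    semantic: (C, H, W), motion: (Cm, H, W), uncertainty: (H, W).
    The attention is down-weighted where matching is uncertain (assumed rule).
    """
    confidence = 1.0 - uncertainty
    attention = confidence / (1.0 + np.exp(-motion.mean(axis=0)))
    # Residual gating: fully uncertain pixels keep the semantic feature as is.
    return semantic * (1.0 + attention[None])
```

The residual form is chosen here so that unreliable motion cues cannot suppress the appearance feature; where matching is ambiguous, the fused output falls back to the semantic feature alone.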

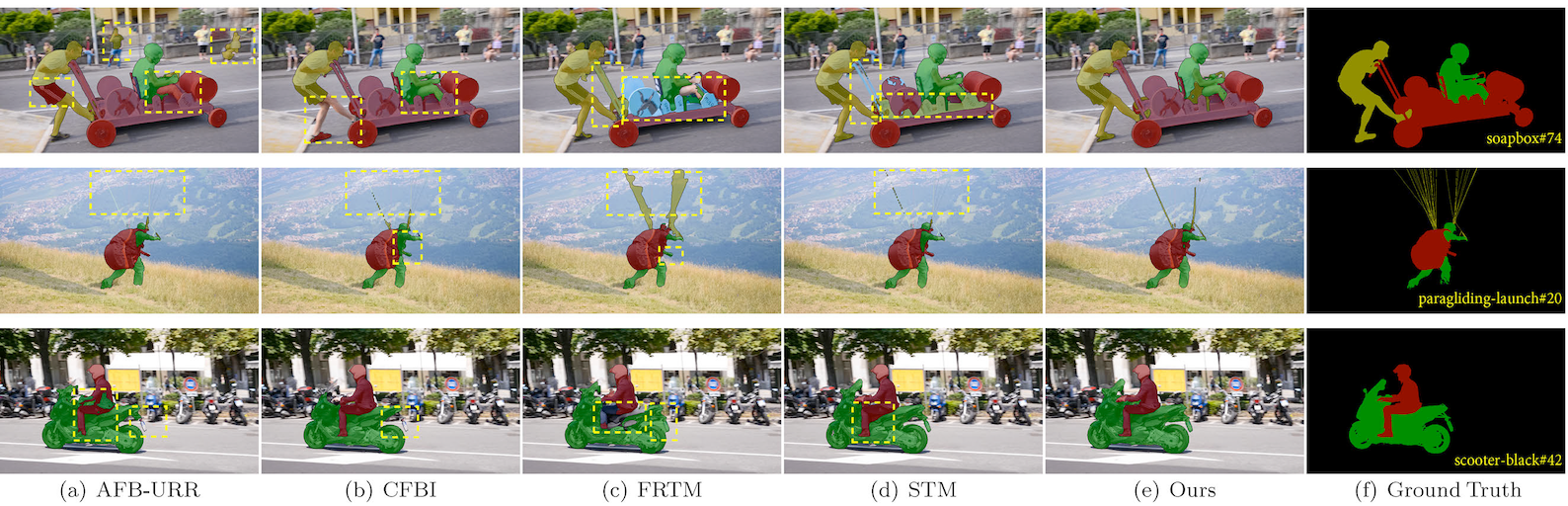

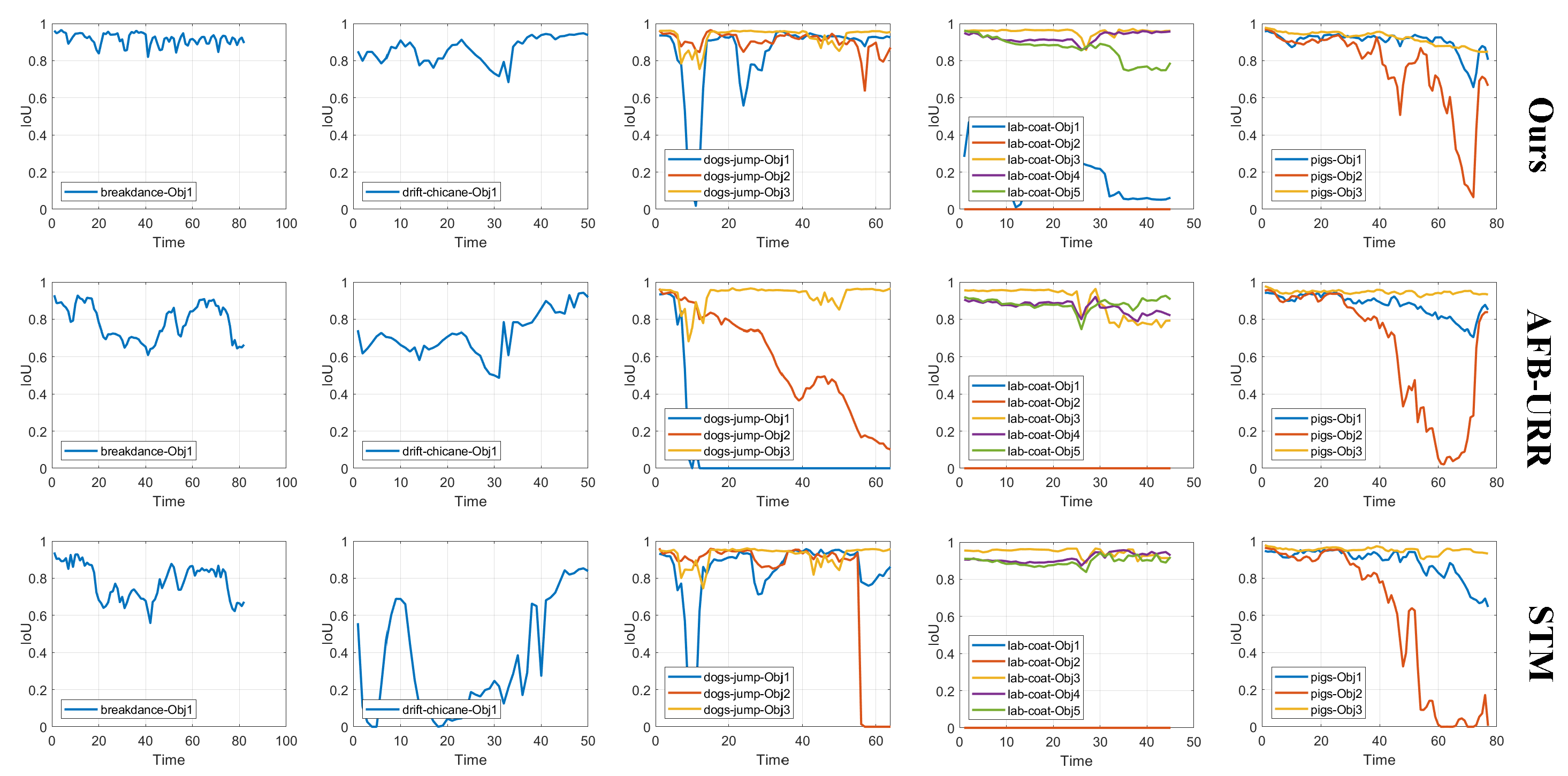

Comprehensive experiments on challenging benchmarks show that combining a small amount of training data with powerful motion information brings a significant performance boost. We achieve 76.5% J&F using only DAVIS17 for training, which significantly outperforms the SOTA methods under the low-data protocol.