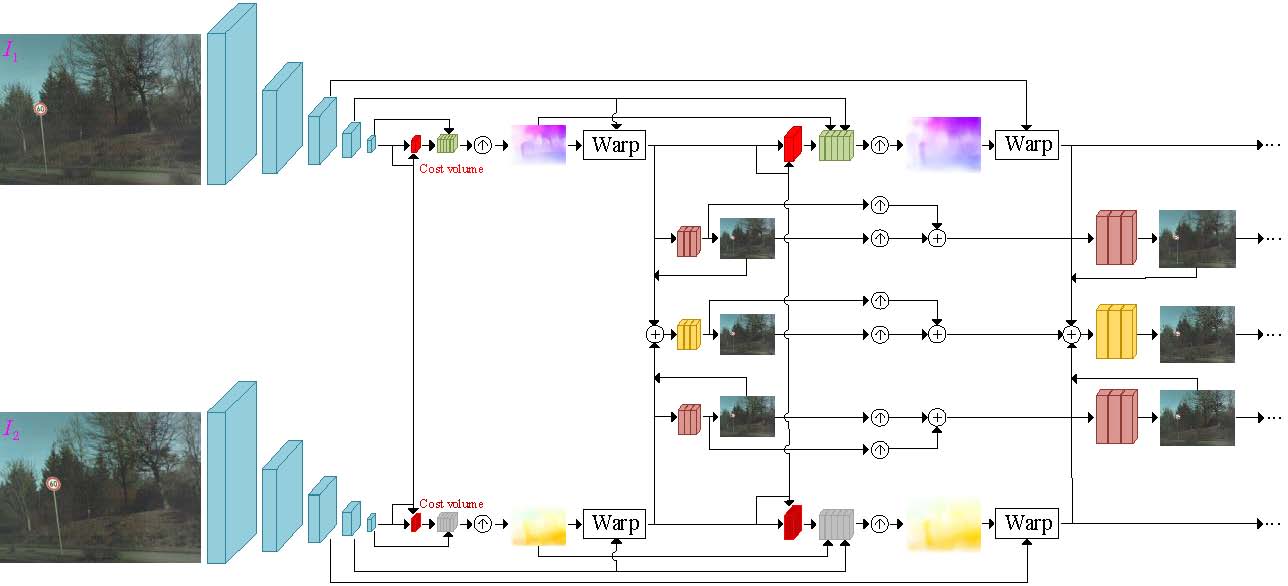

Network Architecture

Our pipeline consists mainly of two sub-networks: a PWC-based undistortion flow estimator and a time-centered GS image decoder. Only the RS correction modules at the top two levels are shown. At the remaining pyramid levels (excluding the first two layers), the RS correction modules have a structure similar to that of the second-to-top level. Note that only the second to fifth pyramid features are warped, following a tailored correlation GS image decoder. The network is designed symmetrically to aggregate two consecutive RS images in a coarse-to-fine manner. Symmetric convolutional layers of the same color share the same weights.
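The control flow described in this caption can be illustrated with a minimal, dependency-free sketch. It is not the network itself. The class and function names, the five-level pyramid, the 1-D toy signals, the scalar `gain` standing in for convolution weights, the integer flow, and the convention that level 1 is the finest level are all assumptions made for illustration. The sketch shows only three ideas from the caption: one encoder shared by both symmetric branches, coarse-to-fine traversal, and warping restricted to levels 2 to 5.

```python
# Toy sketch under stated assumptions; every name and number here is hypothetical.

def downsample(signal):
    """Halve a 1-D signal by averaging neighbouring pairs."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]


class SharedEncoder:
    """A single set of 'weights' reused by both symmetric branches."""

    def __init__(self, gain):
        self.gain = gain  # stand-in for shared convolution weights

    def pyramid(self, signal, levels):
        """Return features ordered finest (level 1) to coarsest (level `levels`)."""
        feats = []
        x = signal
        for _ in range(levels):
            x = [self.gain * v for v in downsample(x)]
            feats.append(x)
        return feats


def warp(feat, flow):
    """Shift a 1-D feature by an integer flow, clamping indices at the borders."""
    n = len(feat)
    return [feat[min(max(i + flow, 0), n - 1)] for i in range(n)]


# Per the caption, only the second to fifth pyramid features are warped.
WARPED_LEVELS = {2, 3, 4, 5}


def coarse_to_fine(feats_a, feats_b, flow=1):
    """Visit levels from coarsest to finest and record which ones were warped."""
    schedule = []
    for level in range(len(feats_a), 0, -1):
        fa, fb = feats_a[level - 1], feats_b[level - 1]
        warped = level in WARPED_LEVELS
        if warped:
            # Opposite flow signs for the two branches are an assumption.
            fa, fb = warp(fa, flow), warp(fb, -flow)
        schedule.append((level, warped, len(fa)))
    return schedule


encoder = SharedEncoder(gain=0.5)            # one encoder, used for both inputs
rs_1 = [float(i) for i in range(64)]         # stand-in for the first RS image
rs_2 = [float(i + 1) for i in range(64)]     # stand-in for the second RS image
feats_1 = encoder.pyramid(rs_1, levels=5)
feats_2 = encoder.pyramid(rs_2, levels=5)
schedule = coarse_to_fine(feats_1, feats_2)
```

Because both inputs pass through the same `encoder` object, any change to its parameters affects both branches identically, which is the sense in which same-colored symmetric layers share weights. In a real implementation the toy `warp` would be replaced by flow-based bilinear sampling of 2-D feature maps.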